Drones are often touted for their ability to benefit farmers through precision agriculture, but solving real-world problems requires a more carefully considered approach—and spinning propellers only represents a small fraction of the work to be done.

Even within the professional uncrewed aircraft systems (UAS) industry, there is a tendency to regard precision agriculture applications as a one-size-fits-all proposition. You fly your drone over a farmer’s field with a weird, expensive camera that has five lenses, plug the resulting images into an expensive piece of software, and you get a false-color map of the crop with green, yellow, and red areas reflecting plant health.

Dr. Joe Cerreta (left) and Dr. Scott Burgess traveled to Turnbull Farm in central Oregon to use UAS to study threats to the region’s hemp crop.

This, in turn, is meant to tell the farmer where more water or fertilizer is required. In theory, that’s great. In the real world, however, the problems tend to be a little more subtle and complex. That’s what I found out when I spent a day on the farm with my friends Dr. Joe Cerreta and Dr. Scott Burgess of the Embry-Riddle Aeronautical University Worldwide Campus Department of Flight.

Dave Turnbull’s farm in central Oregon is home to 8,000 lush, green hemp plants, as well as a few intruders that could ruin his entire crop.

It turns out that after practicing agriculture for something like 10,000 years, farmers have gotten pretty good at figuring out where they need more water and fertilizer. They do have urgent problems that UAS may be able to help them solve, but only if you’re willing to listen to what they actually have to say. For their study of agricultural applications of UAS, Joe and Scott listened to Dave Turnbull, a farmer in central Oregon with five acres in hemp: more than 8,000 plants in all.

This is what he had to say: “We’ve discovered about four male plants in our crop, and that’s about average for a crop of our size. You don’t want to have any male plants in your field. They create a pollen sac, which releases pollen and fertilizes all of your other plants. At that point, your plants will stop producing the flower, which is the part you are looking for in terms of CBD content.

“Not only could that damage our crops, it could damage the crops of all of our neighbors, as well, because the pollen can travel up to five miles.”

The second problem confronting Turnbull’s fragrant farm is a tiny insect called a “leafhopper.”

“The leafhopper is new to central Oregon,” he said. “Apparently, there was a small problem with it that was discovered on beets. The leafhopper picks up a virus from the beets, ingests it and then spreads it to everything else.”

Once infected, a hemp plant demonstrates a distinctive symptom called “leaf curl,” which tends to degrade production, so early detection and treatment would be a huge benefit. Finally, to add insult to injury, the leafhopper doesn’t even enjoy the taste of hemp, so after taking a bite or two, and infecting the plant, it moves on to another plant, infecting it, as well.

In order to make accurate measurements about plants based on the light being reflected from them, it is necessary to gather information about the intensity of the light falling on them. That is the purpose of the sunshine sensor located on top of the Parrot Bluegrass.

Drones are not yet as common as tractors on farms, but that may change if UAS are able to deliver useful information that helps farmers make better decisions and improve crop yields.

Hunting the Hopper

No surprise: the farmer knows his crop. The real question is, can drones do anything to help with either of these problems? That was what Joe and Scott intended to find out when we arrived on a bright, sunny day at Turnbull’s farm. The hemp plants were lush and green, laid out in perfect rows separated by patches of bare soil. Meticulous attention and plenty of hard work kept the field weed-free, without the use of herbicides.

In this effort to detect leaf curl, Joe planned to deploy a Sequoia multi-spectral camera: the one with five lenses.

“What we’re trying to find out is whether a UAS with this particular camera can detect a difference between the health of these plants, and then we will conduct further analysis to see whether or not those differences can be attributed to the disease—or not,” he said.

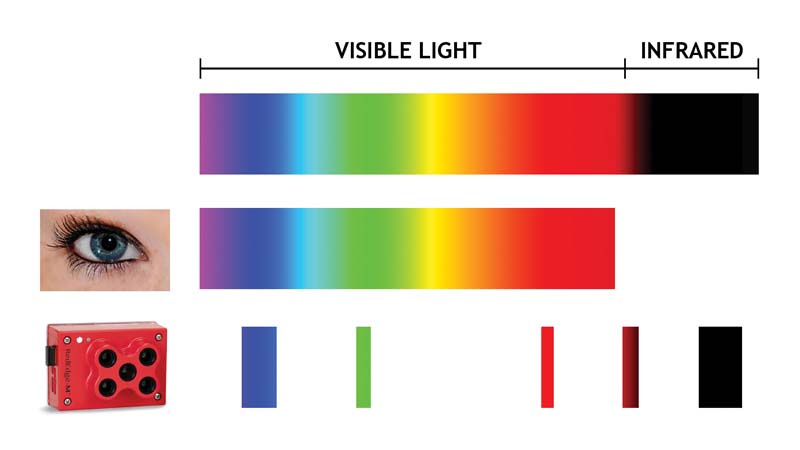

Multi-spectral cameras have five or more separate lenses because each one detects a narrow band of light, referred to as a spectral band. The Sequoia’s sensors capture images in the red, green and blue bands, which we are all familiar with from daily life on planet Earth, as well as two that aren’t as familiar: red edge and near infrared.

The red edge band lies right at the limits of human perception, where the very darkest shade of the color that we know as red passes into the invisible world of infrared light. The near infrared band lies just a little further beyond that. It’s important to realize that this is not the same infrared light that is detected by thermal imaging cameras, which are carried on board drones for search and rescue missions, for example. Those detect long-wave infrared emissions, which sit at much longer wavelengths.

The data captured by these cameras is used to determine the health of a plant by comparing the relative level of light reflected by the plant across these different bands. A healthy plant reflects a lot of green light, which is why plants look green to us, and absorbs a lot of red light. In the near infrared band, it reflects an enormous amount of light. If you were an alien who could perceive near infrared frequencies, gazing upon Earth plants would be blinding.

However, a plant that is less healthy will begin absorbing more green light and reflecting more red light, which is why we perceive dying plants as turning yellow. Weakened plants also begin to absorb more near infrared light. Bad news for our crops, but good news for our alien visitors, who can now comfortably take off their sunglasses.

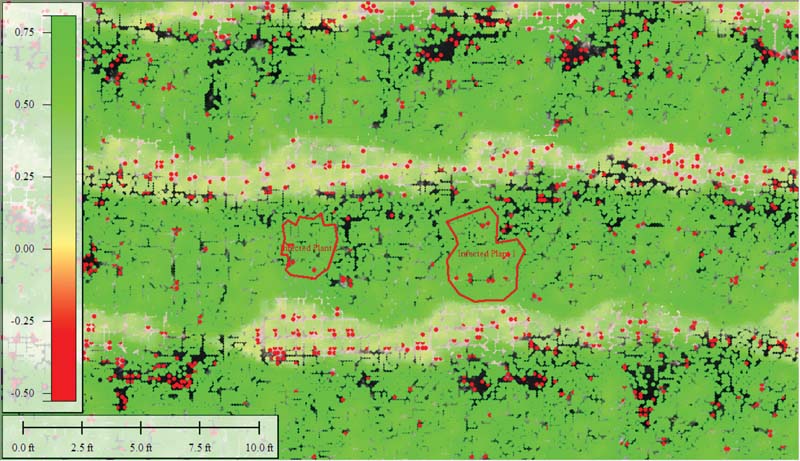

When you see one of those false-color multi-spectral images of a farm field, those green, yellow and red patches reveal the ratios between the different bands of light being reflected by the plants and, thus, their health relative to one another.
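To make that ratio idea concrete, here is a minimal sketch of the Normalized Difference Vegetation Index (NDVI), the standard index that compares near infrared and red reflectance. The reflectance values, the plant labels and the color thresholds below are all hypothetical, chosen only for illustration; they are not taken from the Embry-Riddle data or from any particular mapping software.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel's reflectance values."""
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total


def classify(value, stressed_below=0.4, healthy_above=0.6):
    """Map an NDVI value to a false-color class. Thresholds are illustrative only."""
    if value >= healthy_above:
        return "green"
    if value >= stressed_below:
        return "yellow"
    return "red"


# Hypothetical reflectance values (0.0 to 1.0) for three plants.
plants = {
    "vigorous": {"nir": 0.50, "red": 0.05},
    "stressed": {"nir": 0.35, "red": 0.10},
    "dying": {"nir": 0.20, "red": 0.15},
}

for name, bands in plants.items():
    value = ndvi(bands["nir"], bands["red"])
    print(f"{name}: NDVI={value:.2f} -> {classify(value)}")
```

A healthy plant reflects a lot of near infrared and very little red, so its index lands close to 1. As the plant weakens, the two values converge and the index falls toward 0.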

The success of Joe’s idea, to use a multi-spectral camera to detect leaf curl, would depend on two things. First, does the disease process reduce the health of the hemp plant sufficiently to alter the relative level of light being reflected from it across these different bands? Second, does it impact the plant in such a way that it creates a unique multi-spectral signature?

Whereas the human eye perceives colors across the entire visible-light spectrum, multi-spectral cameras detect only narrow bands of that light, called spectral bands, as well as several additional bands in the near infrared spectrum.

Seen here in a magnified photograph, the leafhopper is a cousin to the much larger grasshopper. Its primary threat to crops lies in its ability to transmit diseases between individual plants.

A DJI Inspire 1 equipped with a ZenMuse XT thermal imaging camera surveys a hemp farm in central Oregon, as part of research performed by Embry-Riddle Aeronautical University.

A Different Light

If you were hoping for definitive answers to those two questions, I have some bad news for you: actual science is a time-consuming process. The flying, which only took a few hours in this case, is the easy part. The real work began once Joe got back to the lab. Of course, when you do get back to the lab, it’s nice to know that your data is reliable.

An essential piece of that puzzle is understanding that the light reflected off of the plants is affected by the light falling on the plants. The “color temperature” of the sun’s light—that is, its hue—changes depending on whether the sun is directly overhead or low on the horizon, whether the sky is clear or overcast, and whether there is dust, smoke or haze in the air.

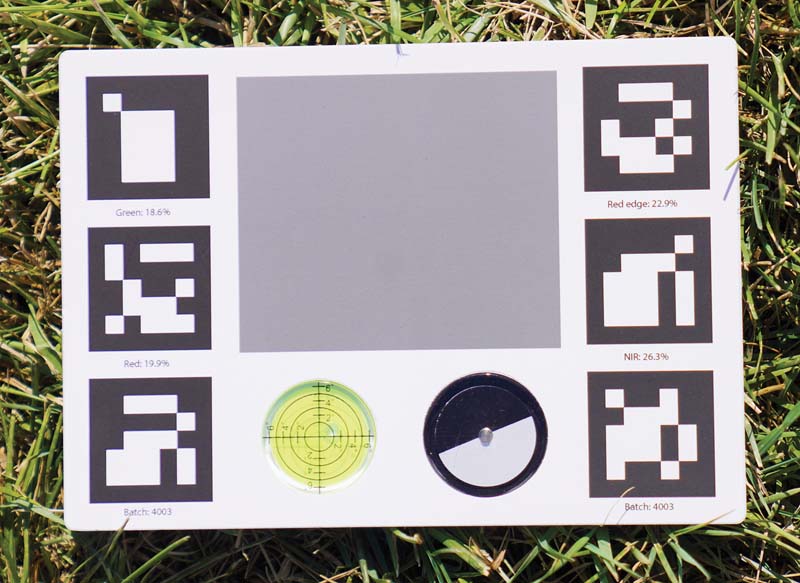

If you don’t account for the quality of the light when you capture multi-spectral images, it is impossible to make meaningful comparisons across multiple measurements through time. Fortunately, there is a pair of solutions that help us solve this problem.

First, on top of an aircraft carrying a multi-spectral camera, you will typically find an opaque square of white plastic, which is the sunlight sensor. During the flight, it collects data regarding the intensity of the sunlight falling on the crops so that the image data can be normalized for the intensity of the light.

Second, before each flight you should calibrate the multi-spectral camera using a “reflectance board.” This accessory is basically a plastic board that comes with the aircraft and provides a known color value. By comparing the result of the calibration image captured in the field to this established target, the camera is able to account for changes in color temperature caused by the local environmental conditions.
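The two corrections described above can be sketched in a few lines. This is a simplified, single-point illustration and not the actual processing performed by the Parrot camera or by Pix4D. The function names, the panel's reflectance of 0.5, the raw pixel values and the relative irradiance figures are all assumptions made up for the example.

```python
def reflectance_scale(panel_raw, panel_reflectance):
    """Factor converting raw pixel values to reflectance, from one panel image."""
    if panel_raw <= 0:
        raise ValueError("panel reading must be positive")
    return panel_reflectance / panel_raw


def to_reflectance(raw, scale, irradiance_at_calibration, irradiance_now):
    """Convert a raw pixel value, correcting for a change in sunlight intensity."""
    if irradiance_now <= 0:
        raise ValueError("irradiance must be positive")
    # Brighter sun now than at calibration inflates the raw value, so divide it out.
    return raw * scale * (irradiance_at_calibration / irradiance_now)


# Hypothetical numbers: the panel is assumed to reflect 50% of the light in this band.
scale = reflectance_scale(panel_raw=20000, panel_reflectance=0.5)

# The same plant, imaged right after calibration and again under sun 25% brighter.
early = to_reflectance(16000, scale, irradiance_at_calibration=1.0, irradiance_now=1.0)
later = to_reflectance(20000, scale, irradiance_at_calibration=1.0, irradiance_now=1.25)
print(f"early: {early:.2f}, later: {later:.2f}")
```

Under these assumed numbers, the plant's raw reading jumps when the sun gets brighter, but its computed reflectance stays the same, which is what makes comparisons across flights meaningful.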

In addition to the work Joe was doing to use multi-spectral imaging as a solution for identifying leaf curl, Scott wanted to test an entirely different approach: visible light imaging. Like it says right in the name, this virus has a visible manifestation: the leaves curl up in a distinctive manner.

Using a DJI Mavic 2 Enterprise Dual for its visible-light capture capabilities, he flew a conventional orthomosaic mapping mission, capturing hundreds of individual photos of the field. Back in the lab, he used Pix4D to combine them into a single, seamless image.

“Once we’ve got that put together, the hope is that maybe we’ll be able to apply some sort of artificial intelligence, to be able to sense where those things are,” explained Scott.

Dr. Joe Cerreta holds a Parrot Bluegrass drone above a reflectance board to calibrate its multi-spectral camera system.

Flying is just one small part of doing science with drones. Analyzing and understanding the data that they capture is the more complex and time-consuming part of the work.

A reflectance board is included with the Parrot Bluegrass agricultural survey drone, to calibrate its multi-spectral camera based on current lighting conditions.

Images captured using a multi-spectral camera can be used to create Normalized Difference Vegetation Index (NDVI) maps of crops. In this preliminary result, scientists from Embry-Riddle Aeronautical University seek to distinguish between plants infected with a virus and their healthy neighbors.

Late in the growing season, male hemp plants reveal themselves by the appearance of pollen sacs. If they are allowed to burst, they can fertilize other hemp plants up to five miles away and degrade crop yields.

The Heat is On

Of course, finding a better way to combat leaf curl was only one of the missions that farmer Turnbull had set for my Embry-Riddle colleagues. The second was to find a way to identify male hemp plants, so that they could be removed before they released their pollen into the air, potentially ruining not only Turnbull’s crop, but those of his neighbors, as well. This was another project that Scott took on during our day on the farm.

“The male plants tend to reveal themselves very late in the season,” he said. “From what we’ve heard in field reports, the male plants will actually change their temperature by a degree or so.”

In this case, we’re talking about the actual, physical temperature of the plant, like you would measure with a thermometer — as opposed to color temperature. This required a third type of sensor: a thermal imaging camera, which reveals differences in heat the same way a conventional camera reveals differences in light.

These are widely used in public safety applications, to reveal the presence of a person who is lost or hiding by their emitted body heat.

To achieve specific, reliable results, Scott would need to deploy a radiometric thermal imaging camera. “Radiometric” sounds like something a frantic scientist might be shouting about 30 seconds before Godzilla breaks the surface of Tokyo Bay: “Radiometric readings are increasing rapidly! We must notify the prime minister!” However, all it really means is the ability to take measurements at a distance.

A radiometric thermal camera can reveal the temperature of objects in the environment, not just whether they are warmer or colder than the surroundings. If you’re looking for a missing hiker, it’s enough to see their body heat against the snowy backdrop. However, if you’re looking for subtle variations in the temperature of individual plants in a hemp field, you might want to know their actual temperatures.

Ironically, the hardware employed in radiometric and non-radiometric cameras is identical. The difference—and the added expense—comes from the fact that the radiometric camera has been painstakingly calibrated so that the temperature value it returns for each pixel is reliable, within established parameters.
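Once you have reliable per-pixel temperatures, the search for male plants becomes a question of spotting outliers. The sketch below is one hypothetical way to do that, assuming you have already reduced the thermal image to a single temperature per plant. The plant IDs, the readings and the one-degree threshold (interpreted here as Celsius) are invented for illustration, and since the field reports don't say whether male plants run warmer or cooler, it flags a difference in either direction.

```python
from statistics import median


def flag_temperature_outliers(temps_c, min_delta_c=1.0):
    """Return IDs of plants whose temperature differs from the field median."""
    baseline = median(temps_c.values())
    return sorted(
        plant_id
        for plant_id, temp in temps_c.items()
        if abs(temp - baseline) >= min_delta_c
    )


# Hypothetical per-plant temperatures in degrees Celsius.
readings = {"A1": 24.1, "A2": 24.3, "A3": 25.4, "A4": 24.0, "A5": 24.2}
print(flag_temperature_outliers(readings))
```

With a non-radiometric camera you could see that plant A3 looks a bit brighter than its neighbors, but you could not say by how much, so a threshold like this one would have nothing to work with.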

Using a collection of two-dimensional photographs along with photogrammetry software from Pix4D, the Embry-Riddle faculty were able to create a 3D map of the farm down to the level of each individual plant.

The Embry-Riddle scientists deployed the Mavic 2 Enterprise Dual for its visible-light capture capabilities. Its built-in thermal imaging camera lacks the resolution that would be required to look for temperature variations between the plants.

Flying for Science

To gather thermal data, Scott deployed a DJI Inspire 1 with a ZenMuse XT camera gimbal, even though it might be too limited for the type of data he was hoping to gather.

“One of the issues we have is getting the right sensor,” said Scott. “In some cameras, that radiometric capability is right in the center of the sensor, so you’ve got to be directly above that male plant in order to get a result. It would be better to have a sensor with a broader perspective with that radiometric capability.

“Carrying that type of sensor requires a different aircraft, so that’s why this becomes a longer-term research project. We need to be able to obtain all of the right equipment, and that takes time.”

Scott hoped that his preliminary flights during the day of our visit to Turnbull Farm would guide those future efforts, by helping to establish the best altitude above the plants to fly a thermal survey mission, for example.

Once again, our trip to the hemp field had proven that even with a fleet of drones equipped with different types of sensors, solving the real-world problems of farmers takes more than technology — it requires an understanding of the issues they face and how, or whether, that technology can deliver useful results.

Text & Photos by Patrick Sherman