In the drone industry, it is taken nearly as gospel that small uncrewed aircraft systems (UAS) will allow people to do their jobs better, safer, and faster than whatever the traditional method happens to be, especially when it comes to public safety. However, if you are making decisions that have financial consequences or lives on the line, it isn’t enough to believe it. You must be able to prove it. That’s the job of researchers like Dr. Joseph Cerreta at the Embry-Riddle Aeronautical University (ERAU) Worldwide Campus: to build the case, through the scientific process, that drones really can deliver results comparable to those of legacy systems, which have demonstrated their reliability through decades of service.

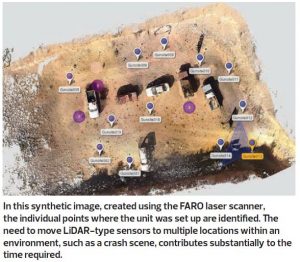

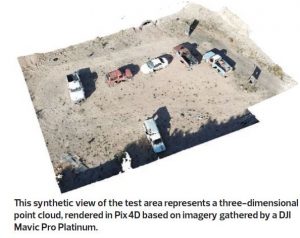

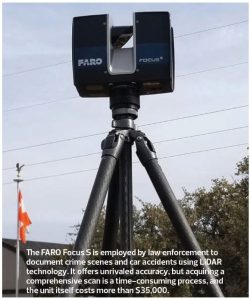

Specifically, Dr. Cerreta wanted to see how 3D models that Pix4D created from drone imagery would stack up against those from a portable, tripod-mounted laser scanner made by FARO. Established in 1981, FARO is a global leader in precision 3D measurement, and its scanners are widely used by law enforcement to create accurate representations of crime scenes and automobile accidents that can be submitted as evidence in court.

Dr. Cerreta selected Gunsite, a civilian firearms training facility in Paulden, Arizona, as the location for his research. The 3,000-acre facility is dotted with outdoor shooting ranges, including one that is home to bullet-riddled cars where students practice their vehicle defense skills.

“We selected this site because it has a lot of vehicles in a small area,” said Dr. Cerreta. “It combines elements you would expect to see both at a crime scene, like bullet holes, and an automobile accident, with parts strewn around the location. Also, it’s an austere location in uncontrolled airspace, so doing our work here reduces the risk both to bystanders and other air traffic.”

LASER BATTLE

According to Dr. Cerreta, the Embry-Riddle Worldwide Campus faculty provides classes for law enforcement officials who want to understand how to integrate drones into their work.

“They have been asking us a lot of questions about which types of drones are the most accurate, and how they should fly them in order to construct an accurate point cloud,” he said. “We needed to do this research so that we could answer those questions with confidence.”

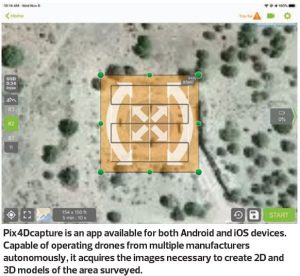

Dr. Cerreta needed to test three specific points: First, which of the small, commercial […] Pix4Dcapture, he tasked each drone with completing the same series of flights: a single grid, a double grid, a circle, and a double grid plus a circle. He then flew those same patterns at five different altitudes: 82, 100, 150, 200, and 250 feet above ground level (AGL).

The lone problem child among the assembled drones was the Mavic 2 Enterprise Dual. Embry-Riddle purchased the Dual with DJI’s smart controller, which features an integrated, Android-based tablet. Dr. Cerreta wasn’t able to load Pix4D onto the controller, so he did his best to replicate the same flight profiles using the DJI Pilot app.
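Pix4Dcapture plans these patterns itself, so the sketch below is only a hypothetical illustration of what the four patterns mean geometrically, in a flat local frame measured in meters. The function names, the 40 m × 30 m site, the 10 m line spacing, the orbit radius, and the photo count are all assumptions chosen for illustration; altitude and camera tilt are left out entirely.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in meters, hypothetical local frame


def grid(width: float, height: float, spacing: float, crosswise: bool = False) -> List[Point]:
    """Serpentine ("lawnmower") pass over a width x height rectangle.

    By default the flight lines run along y and are spaced along x; with
    crosswise=True they run along x instead, giving the second,
    perpendicular pass of a double grid.
    """
    span = height if crosswise else width      # distance the lines are spread across
    length = width if crosswise else height    # length of each flight line
    waypoints: List[Point] = []
    for i in range(int(span // spacing) + 1):
        offset = i * spacing
        # Alternate direction on each line so the aircraft snakes back and forth.
        ends = (0.0, length) if i % 2 == 0 else (length, 0.0)
        for along in ends:
            waypoints.append((along, offset) if crosswise else (offset, along))
    return waypoints


def orbit(cx: float, cy: float, radius: float, n_photos: int) -> List[Point]:
    """Evenly spaced capture points on a circle around (cx, cy)."""
    return [
        (cx + radius * math.cos(2 * math.pi * k / n_photos),
         cy + radius * math.sin(2 * math.pi * k / n_photos))
        for k in range(n_photos)
    ]


def double_grid_plus_circle(width: float, height: float, spacing: float,
                            radius: float, n_photos: int) -> List[Point]:
    """Two perpendicular grids, then an orbit around the center of the site."""
    return (grid(width, height, spacing)
            + grid(width, height, spacing, crosswise=True)
            + orbit(width / 2, height / 2, radius, n_photos))


if __name__ == "__main__":
    plan = double_grid_plus_circle(40.0, 30.0, 10.0, radius=20.0, n_photos=12)
    print(len(plan), plan[:3])
```

The combined pattern simply concatenates the other three, which is one way to picture why it collects the most varied viewing angles of the scene.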

Operating autonomously, each drone flew the same patterns, capturing dozens of individual still images. Although these were two-dimensional photographs, the photogrammetry tools within the Pix4D software suite were able to construct a 3D model of the site from them. The result was a “point cloud”: millions of individual points whose coordinates in three-dimensional space were inferred from the photographs. Using a fundamentally different technique, the FARO laser scanner also created a point cloud of the scene, making it possible to compare the drone and scanner results head to head.

A GAME OF INCHES

Dr. Cerreta presented his results in a peer-reviewed paper entitled “UAS for Public Safety Operations: A Comparison of UAS Point Clouds to Terrestrial LiDAR Point Cloud Data Using a FARO Scanner,” co-authored with Dr. Scott Burgess and UAS undergraduate student Jeremy Coleman from the Embry-Riddle Prescott campus. The accuracy of the drone data was assessed by using AeroPoints ground control points and comparing the point cloud that Pix4D rendered for each flight with the point cloud created from the FARO scanner data.
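The article does not spell out how the difference between two point clouds was calculated. One common approach is a cloud-to-cloud distance: for every point in the drone cloud, find the nearest point in the scanner cloud, then average those distances. The sketch below illustrates that general idea on made-up data; it is an assumption about the technique in general, not a description of the method used in Dr. Cerreta’s paper, and the function names are hypothetical.

```python
import math
from statistics import mean
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in meters


def cloud_to_cloud(compared: Sequence[Point3], reference: Sequence[Point3]) -> List[float]:
    """Distance from each compared point to its nearest neighbor in the reference cloud.

    Brute force, O(len(compared) * len(reference)): fine for a toy example,
    far too slow for real scans.
    """
    return [min(math.dist(p, q) for q in reference) for p in compared]


def summarize_mm(distances: Sequence[float]) -> Dict[str, float]:
    """Mean and worst-case deviation, converted from meters to millimeters."""
    return {"mean_mm": mean(distances) * 1000.0, "max_mm": max(distances) * 1000.0}


if __name__ == "__main__":
    # Hypothetical data: a flat 2 m x 2 m "scanner" patch sampled on a 0.5 m grid,
    # and a "drone" copy of it that sits 30 mm too high.
    scanner = [(x * 0.5, y * 0.5, 0.0) for x in range(5) for y in range(5)]
    drone = [(x, y, z + 0.030) for x, y, z in scanner]
    print(summarize_mm(cloud_to_cloud(drone, scanner)))
```

For clouds with millions of points, a spatial index such as a k-d tree (for example, SciPy’s `scipy.spatial.cKDTree`) would replace the brute-force search; the averaging step stays the same.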

Overall, the two data sets were quite similar. On average, the drone data differed from the laser scanner results by 30 to 35 millimeters (slightly more than one inch). The best results came from the DJI Phantom 4 Pro, with a 26-millimeter variation from the FARO data, whereas the venerable Parrot Bebop 2 performed worst overall, with a 39-millimeter variation.

“Even though UAS are not as accurate as a terrestrial LiDAR system according to our findings, they are, practically speaking, within a reasonable level of accuracy for law enforcement to be able to use drones to document crime scenes or automobile accidents,” Dr. Cerreta explained.

Just as important as the differences between aircraft was the impact of flight pattern and altitude on the final results. Of all the altitudes tested, Dr. Cerreta found that 100 feet AGL yielded the best results. Flying at 82 or 150 feet AGL had a small but measurable effect on accuracy, while flying at 200 feet AGL and above degraded it much more significantly. The double grid plus a circle proved to be the best flight pattern at every altitude, making it the recommended choice for public safety drone operations.
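One way to see why altitude matters is ground sampling distance (GSD): the width of ground represented by a single image pixel, which grows in direct proportion to altitude. The sketch below applies the standard nadir GSD formula, GSD = (sensor width × altitude) / (focal length × image width), with assumed Phantom 4 Pro camera figures (13.2 mm sensor width, 8.8 mm focal length, 5,472-pixel image width). Those defaults are assumptions, not values taken from the study.

```python
FEET_TO_METERS = 0.3048


def gsd_cm_per_px(altitude_ft: float,
                  sensor_width_mm: float = 13.2,
                  focal_length_mm: float = 8.8,
                  image_width_px: int = 5472) -> float:
    """Ground sampling distance of a nadir photo, in centimeters per pixel.

    GSD = (sensor width * altitude) / (focal length * image width).
    The millimeters cancel, leaving meters of ground per pixel.
    Defaults are assumed Phantom 4 Pro camera values.
    """
    altitude_m = altitude_ft * FEET_TO_METERS
    gsd_m = (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


if __name__ == "__main__":
    for alt in (82, 100, 150, 200, 250):  # the altitudes flown in the study
        print(f"{alt:>3} ft AGL: {gsd_cm_per_px(alt):.2f} cm/px")
```

GSD alone predicts that lower is always finer, so it is consistent with the loss of accuracy at 200 feet and above but does not explain why 82 feet came out slightly worse than 100 feet; other factors that this formula does not model must also be at play.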

ADVANTAGE: DRONES!

Beyond offering accuracy comparable to that of their ground-based competitors, drones have some key advantages. According to Dr. Cerreta’s paper, capturing a scene with a drone takes 80 percent less time than using a ground-based scanner like the FARO. That means police officers spend less time in harm’s way, such as standing on the side of a busy road to document a crash. Drones are also far less expensive than LiDAR systems: a Phantom 4 Pro with a nice assortment of accessories can be purchased for less than $2,000, whereas a FARO laser scanner can cost upward of $50,000. That puts detailed crime scene investigation capabilities within reach of smaller law enforcement agencies that might not be able to afford a terrestrial system. Thanks to the hard work of Dr. Cerreta and his colleagues, we no longer need to take it on faith that drones make this type of law enforcement task safer, easier, and faster. We’ve got the peer-reviewed paper to prove it.

Photogrammetry: How it works

Pioneered by Albrecht Meydenbauer, who coined the term in 1867, photogrammetry is the science of extracting reliable measurements and information about the physical world from photographs, such as the distance between two points on the ground or the height of a building. A common example of photogrammetry using drones is orthomosaic mapping.

In this application, the drone flies a fixed pattern over the landscape, taking still photographs at regular intervals with its camera fixed in a nadir orientation, that is, pointing straight down at the ground. Because neighboring images overlap, a computer can match up the individual photos and assemble them into a single picture, like a tile mosaic. The result resembles a conventional, two-dimensional map with a consistent scale.
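The overlap that makes the mosaic possible comes from how closely the photos and flight lines are spaced. A minimal sketch of that arithmetic follows, assuming a simple pinhole camera, the image’s long side oriented across the flight direction, and assumed Phantom 4 Pro sensor figures (13.2 mm × 8.8 mm sensor, 8.8 mm focal length). The 80 percent front and 70 percent side overlap in the example are illustrative values, not settings from the study, and the function names are hypothetical.

```python
from typing import Tuple


def footprint_m(altitude_m: float, sensor_w_mm: float, sensor_h_mm: float,
                focal_mm: float) -> Tuple[float, float]:
    """Ground footprint (width, height) of one nadir image, in meters (pinhole model)."""
    scale = altitude_m / focal_mm  # meters of ground per millimeter on the sensor
    return sensor_w_mm * scale, sensor_h_mm * scale


def capture_spacing(altitude_m: float, front_overlap: float, side_overlap: float,
                    sensor_w_mm: float = 13.2, sensor_h_mm: float = 8.8,
                    focal_mm: float = 8.8) -> Tuple[float, float]:
    """Distance between photos along a line, and between adjacent flight lines.

    Assumes the image's long side is oriented across the flight direction.
    """
    across, along = footprint_m(altitude_m, sensor_w_mm, sensor_h_mm, focal_mm)
    photo_interval = along * (1.0 - front_overlap)
    line_spacing = across * (1.0 - side_overlap)
    return photo_interval, line_spacing


if __name__ == "__main__":
    # 100 ft (30.48 m) AGL with illustrative 80% front / 70% side overlap.
    interval, spacing = capture_spacing(30.48, front_overlap=0.8, side_overlap=0.7)
    print(f"photo every {interval:.1f} m, flight lines {spacing:.1f} m apart")
```

Higher overlap shrinks both distances, which means more photos per flight but more shared features for the software to match between neighboring images.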

Using stereoscopic techniques, it is also possible to build a three-dimensional model with photogrammetry. During World War II, this was done by hand to present British Prime Minister Winston Churchill with an accurate model of a V-2 rocket sitting on its launch pad, convincing him of the danger posed by the Nazi “vengeance weapons.” The process involves complex mathematics but is well within the capabilities of modern computers.
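The core of the stereoscopic idea fits in one formula in its simplest textbook case: two photos taken a known distance apart (the baseline) by parallel, identical cameras. A feature’s horizontal shift between the two images (its disparity) gives its distance as Z = f·B/d, with the focal length f in pixels. The sketch below assumes that idealized, rectified pinhole setup and uses hypothetical numbers; software such as Pix4D solves a far more general multi-image problem, and this is not its algorithm.

```python
from typing import Tuple


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(u: float, v: float, disparity_px: float, focal_px: float,
                baseline_m: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """3D position (X, Y, Z) in the left camera's frame for pixel (u, v) of the left image.

    (cx, cy) is the principal point, where the optical axis meets the image.
    """
    z = depth_from_disparity(focal_px, baseline_m, disparity_px)
    return (u - cx) * z / focal_px, (v - cy) * z / focal_px, z


if __name__ == "__main__":
    # Hypothetical pair: 3,650 px focal length, photos taken 6 m apart, 730 px shift.
    print(triangulate(u=2000.0, v=1500.0, disparity_px=730.0,
                      focal_px=3650.0, baseline_m=6.0, cx=2736.0, cy=1824.0))
```

Because depth is inversely proportional to disparity, distant points shift only slightly between images, so small matching errors translate into large depth errors for them.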

LiDAR: How it works

In the same way that the common word radar is actually an acronym for “radio detection and ranging,” LiDAR is an acronym for “light detection and ranging.” The basic principle behind LiDAR is that the speed of light is a well-known, universal constant. If you measure exactly how long it takes a beam of light to travel to an object and reflect back to its source, multiply that time by the speed of light, and divide by two (because the light covered the distance twice), you know the distance between the source and the object with high precision.
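The round-trip calculation described above can be sketched in a few lines. This models a simple pulsed time-of-flight measurement; some scanners instead infer the delay from the phase shift of a modulated beam, and this sketch is not a description of how any particular FARO model measures range. The function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact by definition, in a vacuum


def range_from_round_trip(t_round_trip_s: float, refractive_index: float = 1.0) -> float:
    """One-way distance to the target from a measured round-trip time.

    The light travels out and back, so the path length is halved. Light is
    slightly slower in air (refractive index about 1.0003), which the optional
    refractive_index parameter can account for.
    """
    speed = SPEED_OF_LIGHT_M_S / refractive_index
    return speed * t_round_trip_s / 2.0


def timing_resolution_for(range_resolution_m: float) -> float:
    """Round-trip timing precision needed to resolve a given difference in range."""
    return 2.0 * range_resolution_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    print(range_from_round_trip(100e-9))   # echo received after 100 nanoseconds
    print(timing_resolution_for(0.001))    # timing precision needed for 1 mm
```

A 100-nanosecond echo corresponds to a target roughly 15 meters away, and resolving a single millimeter requires timing the echo to within a few picoseconds, which shows how demanding millimeter-level ranging is.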

Typically, lasers rather than ordinary light are used in LiDAR systems because their narrow, focused beams deliver pinpoint accuracy. Commercial terrestrial scanners, like those built by FARO, sweep a laser across the environment with rotating optics and rapidly build up an accurate 3D model. Of course, objects in the environment hide whatever is located behind them, so scans must be taken from several different positions to build up a complete model, which is a time-consuming process. When all of the data have been collected, those billions of individual measurements are loaded into a computer that processes them into a model of the environment that is accurate down to the millimeter. This model is referred to as a “point cloud” because it defines shapes through the locations of millions of individual points in three-dimensional space. To aid human comprehension, point clouds are often overlaid with photographs from the scene to create a recognizable virtual environment.
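Each scanner measurement is essentially a range plus two angles, so turning measurements into points, and merging scans taken from several positions, is largely a change of coordinates. The sketch below assumes each station’s position and heading are already known and that the scanner is perfectly level; in practice, those poses are worked out through a registration step, for example using targets or overlapping geometry. All names and numbers are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Point3 = Tuple[float, float, float]


def polar_to_xyz(range_m: float, azimuth_rad: float, elevation_rad: float) -> Point3:
    """Convert one measurement (range, horizontal angle, angle above horizontal)
    to x, y, z in the scanner's own frame."""
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


def to_site_frame(points: Iterable[Point3], station: Point3, heading_rad: float) -> List[Point3]:
    """Rotate scanner-frame points by the station's heading, then shift by its position."""
    c, s = math.cos(heading_rad), math.sin(heading_rad)
    sx, sy, sz = station
    return [(c * x - s * y + sx, s * x + c * y + sy, z + sz) for x, y, z in points]


if __name__ == "__main__":
    # Two hypothetical stations facing each other, each measuring a spot 5 m ahead.
    scan_a = [polar_to_xyz(5.0, 0.0, 0.0)]
    scan_b = [polar_to_xyz(5.0, 0.0, 0.0)]
    cloud = (to_site_frame(scan_a, (0.0, 0.0, 1.5), 0.0)
             + to_site_frame(scan_b, (10.0, 0.0, 1.5), math.pi))
    print(cloud)  # both measurements land near (5.0, 0.0, 1.5)
```

Once every scan is expressed in the same site frame, the combined point cloud is just the union of the transformed points, which is why accurate station poses matter as much as accurate ranges.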

Text & photos by Patrick Sherman, an adjunct faculty member at the Embry-Riddle Aeronautical University Worldwide Campus.