The next time you are out flying, ask yourself: what am I looking at? According to a study by Embry-Riddle Aeronautical University, there’s a pretty good chance that it isn’t your drone—but augmented reality has the potential to help you do a better job of focusing on your aircraft, which is really important, as this excerpt from Part 107 shows:

14 CFR § 107.31: Visual line of sight aircraft operation.

A. With vision that is unaided by any device other than corrective lenses, the remote pilot in command, the visual observer (if one is used), and the person manipulating the flight control of the small unmanned aircraft system must be able to see the unmanned aircraft throughout the entire flight in order to:

1. Know the unmanned aircraft’s location;

2. Determine the unmanned aircraft’s attitude, altitude, and direction of flight;

3. Observe the airspace for other air traffic or hazards; and

4. Determine that the unmanned aircraft does not endanger the life or property of another.

One type of augmented reality (AR) commonly used in crewed aviation is the Heads-Up Display, which provides pilots with immediate access to crucial flight data, without having to look down at the aircraft instruments inside the cockpit. (Photo courtesy of Telstar Logistics)

As this regulatory excerpt makes abundantly clear, maintaining visual line of sight (VLOS) with your aircraft is a core responsibility of the Remote Pilot In Command (RPIC), along with his or her air crew. However, it’s fair to ask whether or not most commercial operators strictly adhere to it. I’ll confess that I basically never meet this standard. I’d be willing to bet that you don’t, either, but don’t worry, your secret is safe with me. Also, it isn’t really a secret.

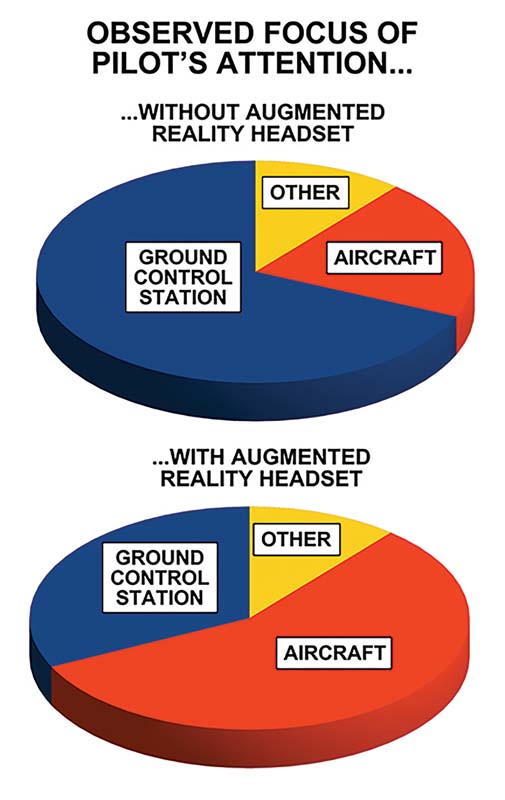

The truth is, we’re in good company. During a field study conducted by Jeff Coleman and David Thirtyacre for the Embry-Riddle Aeronautical University Worldwide Campus Department of Flight, the school’s own faculty—some of the most skilled and highly trained UAS pilots in the world—were observed to spend nearly 70 percent of their time focused on the aircraft’s ground control station (GCS), rather than the aircraft itself.

For anyone who has ever flown a small uncrewed aircraft system (sUAS), the reason is obvious: the GCS is where the action is! By looking at your drone, you are able to determine its attitude and direction of flight and estimate its altitude. However, by looking at your GCS, you are able to see its real-time video downlink, as well as determine with precision its location, altitude, distance and direction to the launch point, horizontal and vertical speed, GPS receiver and sensor feedback, battery power remaining, payload function and status, radio signal strength and many other factors.

If we were flying in an environment somehow guaranteed to be free of any hazards—no other aircraft, obstructions, people or sensitive property anywhere in the vicinity—we would probably stare at our GCS display 100 percent of the time, because the information that it provides is so valuable. However, we do not live in such an environment, which is why the FAA puts such a strong emphasis on maintaining VLOS with the aircraft: it’s the only way to guarantee we aren’t flying into trouble.

The results of this field study indicated a dramatic shift in the behavior of remote pilots when equipped with an augmented reality (AR) system, substantially increasing the quantity of time spent maintaining direct visual line of sight (VLOS) with their aircraft.

A Better Way?

When Coleman and Thirtyacre initiated their research, it wasn’t aimed exclusively at making their colleagues look bad. Instead, they wanted to see if augmented reality (AR), provided by the Epson Moverio BT-300 and BT-35E smart eyewear system, could enable more faithful VLOS operations while simultaneously giving the pilot access to all of the video and telemetry provided by the GCS.

Wearing a pair of Moverios superimposes the display you would normally see on your GCS over the real world, allowing you to see it and your aircraft simultaneously. The results are not dissimilar to the heads-up display (HUD) in modern jet fighters.

The question the researchers sought to answer was: will having this HUD available change the behavior of pilots flying autonomous missions? Thirtyacre described the process of gathering the data, beginning with the fact that each pilot was asked to fly two comparable autonomous missions: one using a conventional GCS and the other wearing the Moverios.

“We videotaped people flying over a period of four days, and we took that data away and analyzed it,” he said. “Based on the position of their eyes and head, we made a judgment about whether they were looking at the GCS, the aircraft, or something else. Because this was a field study, not an experiment, it’s important to understand that we couldn’t control for all of the variables. We could only document what happened, and base our judgment on that.”

The Moverio augmented reality (AR) glasses from Epson incorporate a binocular 720p display, a 5-megapixel integrated camera, and WiFi and Bluetooth connectivity.

Weighing just 2.6 ounces, the Epson Moverio augmented reality (AR) glasses are comfortable to wear for extended periods of time and are bright enough to use in full daylight with the included sunshades.

One big example of an uncontrolled variable was the pilots themselves: fully aware of the fact that they were being studied and videotaped, did they, even subconsciously, change their behavior in a way that they believed their peers would approve of?

With the field observations complete, multiple judges were assigned to watch each pilot fly, to ensure a reliable measure of time spent looking at the aircraft, the GCS and elsewhere in the environment, such as speaking with a colleague.

The results were striking, according to Thirtyacre: when flying with a conventional GCS setup, even these experienced pilots spent more than two-thirds of their time looking at the display rather than their aircraft.

Thirtyacre concluded: “As RPICs, we spend a lot more time looking at the GCS than we do at the aircraft — a whole lot more than anybody thought. The amount really surprised me. We teach people to maintain VLOS while they are positioning their aircraft in the sky in the general vicinity of where they want it, and then look down at the display—but that isn’t what they are doing, at least according to this study.”

This simulated image provides a glimpse of what it is like to operate a drone using Epson’s Moverio smart glasses. As the pilots in this study gained experience using the augmented reality (AR) technology, they found it best to keep the aircraft in one corner of their field of view, where it was less likely to be lost in the clutter of the video and telemetry display.

David Thirtyacre tries out the Moverio augmented reality (AR) glasses from Epson as a supplement to the conventional ground control station display used by the overwhelming majority of remote pilots. (Photo courtesy of Embry-Riddle Aeronautical University)

Advantage: Augmented Reality

When the pilots flew a comparable mission using AR technology, the results were dramatic: very nearly the reverse of the previous test. Wearing the Moverios, the pilots spent more than half of the time looking up at the aircraft. For Thirtyacre, this was an important insight—one that will require further research to confirm, but also one that hinted AR might have an important role to play in the future of UAS operations.

“In accordance with Part 107, we need to maintain VLOS with the aircraft. Does hearing the aircraft behind me while looking at the GCS constitute VLOS? I don’t think so,” he said. “I think it is very important that we understand where the aircraft is and the environment around it. If you’re not looking at your aircraft, how do you know you’re not flying over people? How do you know whether or not there are power lines nearby?”

One question Thirtyacre would like to see addressed by a future study is that of “dwell time.” That is, how long are the uninterrupted stretches pilots spend looking at the GCS display before visually checking in with the aircraft?

“Manned pilots are constantly scanning the environment while they are flying. They periodically glance down at their instruments, but that interval is measured in seconds,” he said. “My guess is that we’ll find people stare at the display for two, three or four minutes at a time. We need to move toward an approach that more closely resembles what happens in manned aviation.”
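For readers who want to see how dwell time might be measured, here is a minimal sketch in Python. It assumes a hypothetical gaze log with one judged label per second of flight; the labels, the sample values and the function names are all invented for illustration and are not taken from the Embry-Riddle study.

```python
from itertools import groupby

# Hypothetical gaze log: one judged label per second of flight.
# These values are made up for illustration; they are not study data.
gaze_log = [
    "GCS", "GCS", "GCS", "AIRCRAFT", "GCS",
    "GCS", "OTHER", "AIRCRAFT", "AIRCRAFT", "GCS",
]


def dwell_times(labels, target, seconds_per_sample=1.0):
    """Return the length, in seconds, of each uninterrupted run of `target`."""
    return [
        sum(1 for _ in run) * seconds_per_sample
        for label, run in groupby(labels)
        if label == target
    ]


def share_of_time(labels, target):
    """Return the fraction of all samples spent looking at `target`."""
    return labels.count(target) / len(labels)


gcs_dwells = dwell_times(gaze_log, "GCS")
print("GCS dwell times (s):", gcs_dwells)
print("Longest GCS dwell (s):", max(gcs_dwells))
print("Share of time on GCS:", share_of_time(gaze_log, "GCS"))
```

The point of separating the two measures is that a pilot could spend the same overall share of time on the display in many quick glances or in a few long stares. Only the dwell times would tell those patterns apart.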

Dr. Scott Burgess of the Embry-Riddle Aeronautical University Worldwide Campus Department of Flight looks up at his aircraft through a pair of Epson Moverio augmented reality (AR) glasses. (Photo courtesy of Embry-Riddle Aeronautical University)

Virtual reality (VR) users completely immerse their vision and hearing in a synthetic world by means of a head-mounted display (HMD).

Choose Your Own Reality

Like it or not, we all live in the same reality: all of us exist under the effects of Earth’s gravitational field, atmospheric chemistry, diurnal cycle and our own innate biology. The world rolls on, and will until the heat death of our sun in about five billion years, in full compliance with the laws of physics. None of us can change that — however, we can change the way it looks. Here are some options:

Augmented Reality (AR)

By superimposing visual information between us and the real world, AR allows us to simultaneously perceive the world around us, augmented with relevant content displayed by a computer system. The first practical, widespread use of AR took the form of Heads-Up Displays (HUDs) in military aircraft, which display the aircraft’s weapons status, attitude, altitude, heading, remaining fuel, radar target lock and other crucial flight information on a transparent screen located directly in the pilot’s line of sight.

The technology was pioneered by the Royal Air Force during World War II and has since become a standard fixture on military aircraft and even some commercial aircraft.

The Epson Moverios employed by Embry-Riddle researchers in this study provide a HUD-type capability for drone pilots, projecting the aircraft’s video link and telemetry into the pilot’s field of view while the pilot keeps the aircraft in sight.

Virtual Reality (VR)

A person employing a VR system blocks out the real world, in favor of a computer-generated simulation. VR is a burgeoning sector of the computer gaming industry, allowing players to immerse themselves in fantasy worlds and use the movement of their entire body as a game controller. This technology has also found applications in fields as diverse as architecture and urban design, healthcare, occupational health and safety, education and many others.

VR has been used to create tours of inaccessible locations, such as the International Space Station or ancient cities that have long since fallen into ruin, providing a lifelike experience for virtual visitors who could otherwise never see them.

The first VR experiences were created by artists in the 1970s, using powerful computers made available by the Jet Propulsion Laboratory and the California Institute of Technology in Pasadena. One challenge that the industry has yet to address is how to prevent VR users from looking like world-class dweebs.

Mixed Reality

Sharing elements of both AR and VR, mixed reality allows its users to perceive their actual surroundings through a transparent screen. However, the mixed-reality system uses this screen to display a virtual object anchored at a specific location in the real world. Combined with simultaneous localization and mapping (SLAM) technology, mixed reality allows multiple individuals in the same physical space to see the same virtual object, each from their own perspective.

One use case might involve a group of architects working together on a new building design. The design exists only as a virtual 3D object, which appears to sit on a real conference table that they have all gathered around. The participants are able to walk around the model, examining it from different sides and exchanging comments and ideas with their peers.

The best-known mixed reality system currently available is the Microsoft HoloLens, first released in 2016. It borrowed its tracking technology from the Kinect module produced for the Xbox gaming system.

Mixed reality systems allow virtual objects to be embedded in the real world, with each individual user perceiving the object from their own perspective—creating a potent tool for collaboration. (Photo courtesy of Hoshinim)

Text & photos by Patrick Sherman

Patrick Sherman is a full-time UAS instructor at the Embry-Riddle Aeronautical University Worldwide Campus Department of Flight.

After reading this article I feel that the use of both AR and VR would help UAS pilots tremendously by eliminating the need to look away from the aircraft, preventing most UAS failures. Good read.

Well, I’m pretty new to this, but it all makes a lot of sense. I believe that people are gonna do what they want to do. But as for me, y’all are experts for a reason, so if you say VR or AR or whatever acronym it is, I’m with y’all on the safety of what’s around you. It’s almost like driving: you can be an offensive driver, or look out for what’s around you and be a defensive driver. I choose the latter. Awesome article, thank you.